Introduction

In my last post, I ported the Block Sudoku game to Javascript so I could use Phaser to develop a user-friendly playable version of the game. In this section, we're going to go back to the python version and attempt to build it into OpenAI's gym environment. OpenAI provides a pretty decent starting tutorial here, but since it lacks quite a bit of information (as well as being outdated), I ended up scouring the environments that Gym ships with to figure out how to tie Block Sudoku into the AI Gym platform.

Converting Block Sudoku for AI Gym

I won't go into the specific folder structure needed to make an AI Gym environment, but if you're curious you can check out the source code for my OpenAI Gym Environment on my github. The main file we'll be working with is under gym_blocksudoku/envs/blocksudoku_env.py.

Importing the class we built earlier for the game is straightforward. We now just need to connect it to our BlockSudoku class. Two important things to note are the action_space, and observation_space variables which will be the shapes of the action and observation space respectively.

def __init__(self):

self.game = BlockSudokuGame()

self.factory = BlockFactory()

# Generate first three blocks and game board

self.main_board = self.game.new_board()

self.block_queue = self.factory.generate_3blocks()

# reset total score

self.is_running = True

self.total_score = 0

self.current_steps = 0

self.max_steps = 2000

# action space

self.action_space = spaces.Discrete(3*9*9)

# observation space

self.observation_space = spaces.Box(

low=0, high=1, shape=(15, 15, 1), dtype=np.uint8

)

The Action Space

Since we have 3 blocks (at most) in our queue, and 81 different tiles that block could go in, our action space is therefore 243. This is a significantly larger action space than many of the examples in OpenAI Gym, but I'm hoping it won't be too much of a problem. If anything, it may just complicate training our network later. Given a number between 0 - 242, the environment will decode this into a block on the queue and it's respective x and y position. Below are such examples of this.

| Action | Block Queue | X Position | Y Position |

|---|---|---|---|

| 0 | 0 | 0 | 0 |

| 9 | 0 | 0 | 1 |

| 88 | 1 | 7 | 0 |

| 242 | 2 | 9 | 9 |

It's important to note that certain actions are impossible. We are not able to place a block if it intersects another block already on the board. We also cannot place a block from queue position 2 if we only have 1 block left in the queue. In these cases, we'll mark the action as invalid and return a negative reward. Conversely, the only way to gain positive reward is to clear lines off the board.

The Observation Space (Game state)

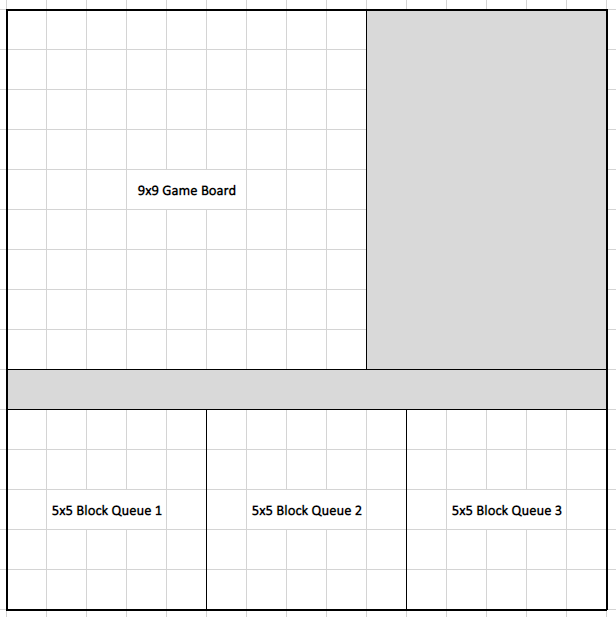

For the state of the game, we're going to be arranging it as a 15x15 array with the board on top and blocks in queue on bottom. For every step of the environment (after we receive an action), this state will be returned.

Our first agent - Random Agent

Now that we have OpenAI gym all set up with our project, the first "AI" agent we'll subject our game to is random agent. As the name suggests, Random Agent will randomly pick an action from the action state. It doesn't really care about the next state, nor will it act upon rewards. Implementing Random Agent is pretty straightforward:

import gym

from gym_blocksudoku.envs.blocksudoku_env import BlockSudoku

import numpy as np

import random

env = gym.make('blocksudoku-v0')

env.reset()

for _ in range(10000):

env.render()

# Take a random action

t = np.zeros((3,9))

t[0,random.randint(0,2)] = 1

t[1,random.randint(0,8)] = 1

t[2,random.randint(0,8)] = 1

t

state, reward, done, _ = env.step(t)

print('reward: ' + str(reward))

if done:

print('Game is finished')

print('Total Score: ' + str(env.total_score))

break;

env.close()

The results of running Random Agent are as follows:

| Trial | Score |

|---|---|

| 1 | 3 |

| 2 | 2 |

| 3 | 0 |

| 4 | 0 |

| 5 | 0 |

| 6 | 0 |

| 7 | 1 |

| 8 | 0 |

| 9 | 0 |

| 10 | 0 |

Random Agent got pretty lucky the first two times, and then proceeded to score nothing until Trial 7. Since none of the scores even came close to the lowest score (5) in the baseline human benchmarks, we can conclude that randomly choosing positions isn't a very effective strategy.

Trying to solve BlockSudoku for yourself

I published the OpenAI gym implementation of BlockSudoku to PyPi, and if you'd like to try to design an agent for it, you can simply use pip to install it.

pip install gym-blocksudoku

Next up, building a smarter algorithm to help solve the game for us!