Introduction

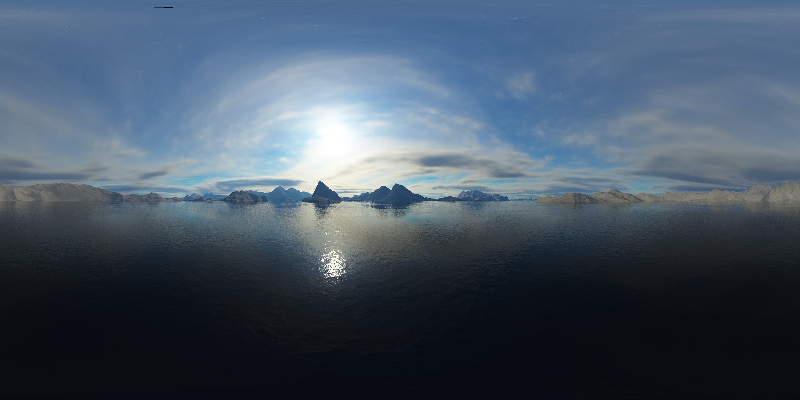

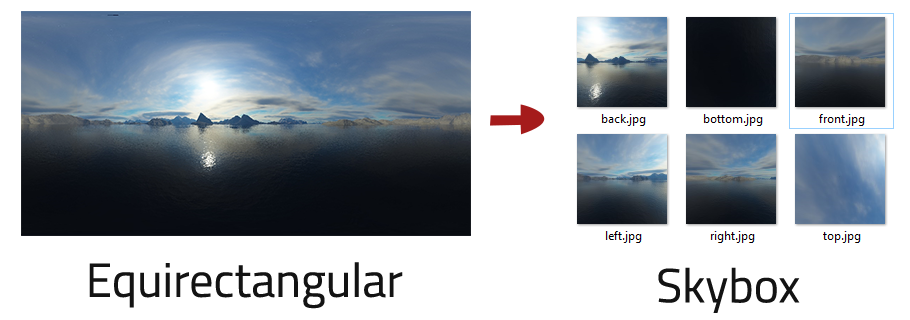

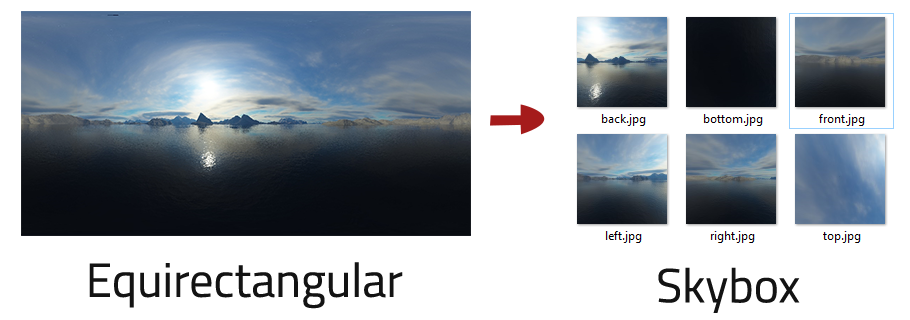

For the past few weeks, I've been working on a fun little project called the Environment Map Projector that converts environment maps between formats, for example from Skyboxes to Equirectangular or Hemispherical. Although a few tools are already available to do this task, some are locked behind either commercial software or paid assets in the Unity Store, and the few Python implementations on GitHub slow to a crawl if fed any image larger than 2k.

Because documentation for the overall theory of the conversion process is spotty, much of this article is dedicated to describing the approach I took to build this tool. I hope it helps individuals looking for more than just a code dump.

That said, if you are looking for just the implementation or application, you can find it on GitHub.

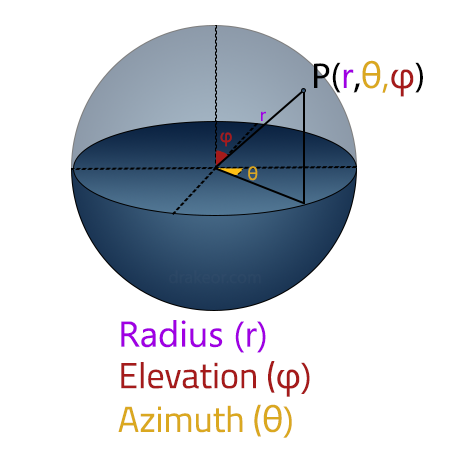

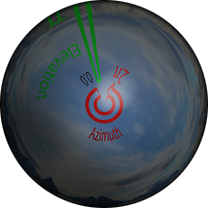

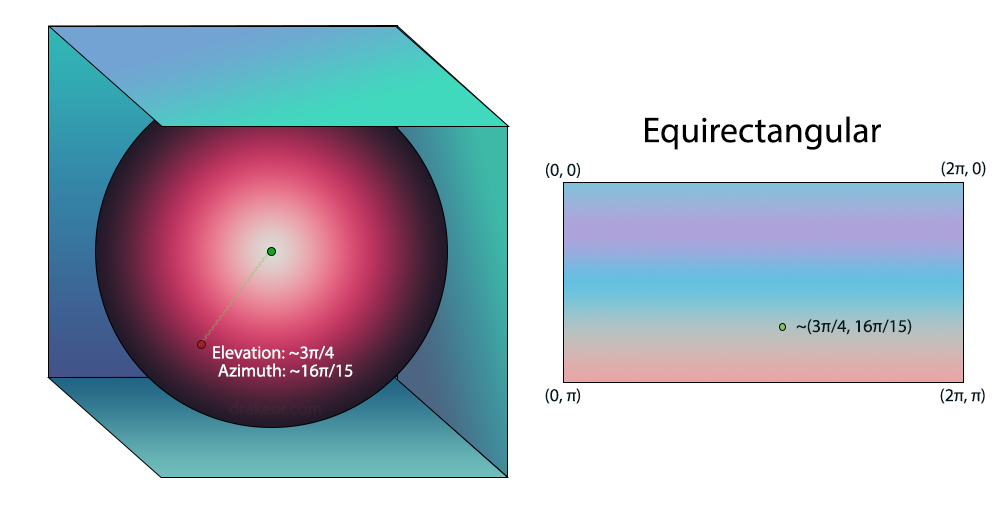

Spherical Coordinates

Before we move straight into the projections, it's essential to establish some background knowledge of the spherical coordinate system. Anyone who's taken calculus or physics may tell you that switching coordinate systems can sometimes simplify a problem greatly. This problem is one such example.

Unlike the standard Cartesian coordinate system, which specifies the position of a point as the distance from three perpendicular planes (x,y,z), spherical coordinates specify the position of a point in terms of radius (r), azimuth angle (θ), and elevation angle (φ).

Two important notes. The azimuth is restricted to the range of [-π, π], with the size of the range being 2π. The elevation is restricted to the range of [-π/2, π/2], with the size of the range being π.

Note: Physics and mathematics swap the standard conventions of what (θ) and (φ) represent. To avoid ambiguity, I will take the MathWorks approach and use Azimuth and Elevation instead for the rest of this post. Furthermore, even though the ranges of the two angles are commonly listed as [0, 2π] and [0, π], the atan2 function returns values in [-π, π] in both C++ and MATLAB, which is why we center both ranges on 0 instead.

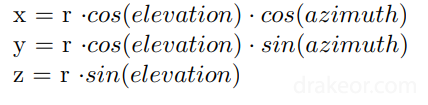

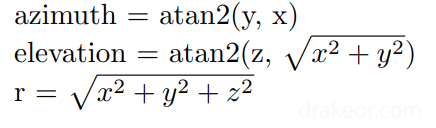

Swapping between cartesian (x,y,z) and spherical coordinate systems (r,θ,φ) can be done with the following set of equations:

Spherical to Cartesian:

Cartesian to Spherical:

(Source)
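Written out under the MathWorks convention adopted above (azimuth θ measured in the xy-plane from the positive x-axis, elevation φ measured up from the xy-plane), the two conversions take the following form. This is the standard `sph2cart`/`cart2sph` formulation, given here on the assumption that it is the variant the rest of this post relies on.

```latex
% Spherical to Cartesian
x = r \cos\varphi \cos\theta, \qquad
y = r \cos\varphi \sin\theta, \qquad
z = r \sin\varphi

% Cartesian to Spherical
r = \sqrt{x^2 + y^2 + z^2}, \qquad
\theta = \operatorname{atan2}(y,\, x), \qquad
\varphi = \operatorname{atan2}\!\left(z,\, \sqrt{x^2 + y^2}\right)
```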

Note: The conversion equations can also be listed like so (Note the use of sin instead of cos and the arguments for atan2 in elevation being swapped for the inverse transformation). It shouldn't matter which one you choose as long as you are consistent.

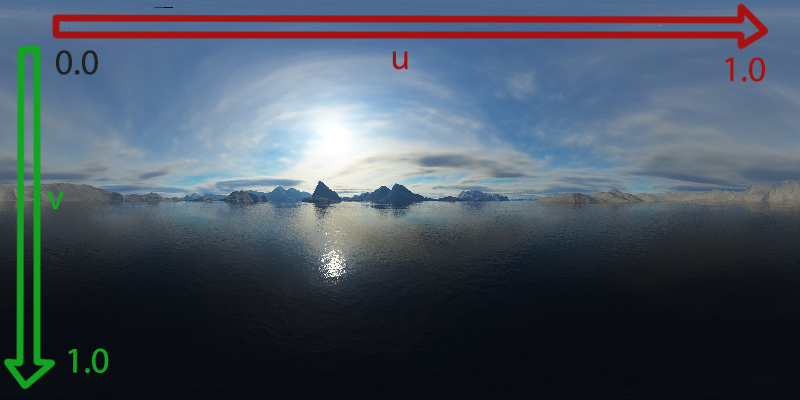
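To make the "be consistent" point concrete, here is a minimal C++ sketch of one matching pair of conversions, assuming the azimuth/elevation convention described above. The `Cartesian` and `Spherical` structs and both function names are hypothetical and only for illustration; they are not the project's own classes.

```cpp
#include <cmath>

// Hypothetical helper types for illustration only.
struct Cartesian { double x, y, z; };
struct Spherical { double radius, azimuth, elevation; };

// Cartesian -> spherical.
// Azimuth lands in [-pi, pi], elevation in [-pi/2, pi/2].
Spherical ToSpherical(const Cartesian& p)
{
    Spherical s;
    s.radius    = std::sqrt(p.x * p.x + p.y * p.y + p.z * p.z);
    s.azimuth   = std::atan2(p.y, p.x);
    s.elevation = std::atan2(p.z, std::sqrt(p.x * p.x + p.y * p.y));
    return s;
}

// Spherical -> Cartesian, the inverse of ToSpherical above.
Cartesian ToCartesian(const Spherical& s)
{
    return { s.radius * std::cos(s.elevation) * std::cos(s.azimuth),
             s.radius * std::cos(s.elevation) * std::sin(s.azimuth),
             s.radius * std::sin(s.elevation) };
}
```

Because the two functions use the same convention, converting a point to spherical coordinates and back should return the original point up to floating-point error. Mixing one function from this pair with one from the alternative form would not.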

UV Textures

UV Textures use u and v (instead of x and y) to describe the coordinates of a specific point on that texture. The vital thing to note is that UV coordinates are typically restricted to the range of [0,1].

Equirectangular Projections

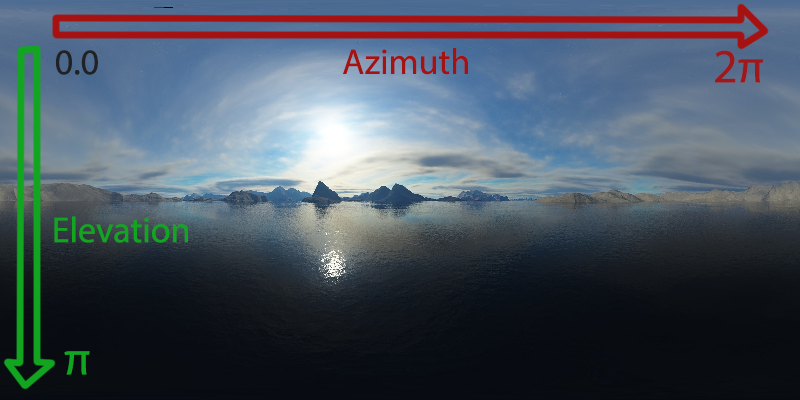

With that background information out of the way, let's move straight into our first application of the spherical coordinate system: the Equirectangular Projection.

Looking at each pixel in our UV texture, we represent its position along the u-axis as an azimuth angle on the unit sphere. We represent the pixel's position along the v-axis as its elevation angle on the unit sphere.

Since the size of the range of the azimuth angle (2π) is double that of the elevation angle (π), we prefer a texture where the width is 2x that of the height.

Now we just need to convert from the UV domain of [0, 1] to [-π, π] along the u-axis and from [0, 1] to [-π/2, π/2] along the v-axis. This will give us a 2D array of all the points on the sphere in spherical coordinates. Note that since we're working with the unit sphere, the radius will always be 1. The pseudocode for this transformation looks like this:

    for each pixel in texture with position (x,y):
        u = x / textureWidth
        v = y / textureHeight
        azimuth = (u * 2pi) - pi
        elevation = (v * pi) - (pi/2)
        radius = 1
        // ... use this information to build a skybox.

In actual code, this looks something like the following:

    const float pi = 3.14159265f;

    for(int x = 0; x < texture->GetWidth(); x++)
    {
        for(int y = 0; y < texture->GetHeight(); y++)
        {
            // Grab pixel value from the texture
            unsigned int pixelData = texture->GetPixelValue(x, y);

            // Convert to UV coordinates. range: [0,1]
            float u = (float)x / (float)(texture->GetWidth() - 1);
            float v = (float)y / (float)(texture->GetHeight() - 1);

            // Convert to range for azimuth [-pi, pi]
            float azimuth = (u * 2.0f * pi) - pi;

            // Convert to range for elevation [-pi/2, pi/2]
            float elevation = (v * pi) - (pi / 2.0f);

            // ... use this information to build a skybox later
        }
    }

Full source files are available as EquirectangularProjection.cpp and CoordContainerSpherical.cpp on GitHub.

Casting the pixels directly to coordinates on the sphere gives us the following top half of the sphere (We are positioned above looking down onto the sphere).

Modeling the Skybox

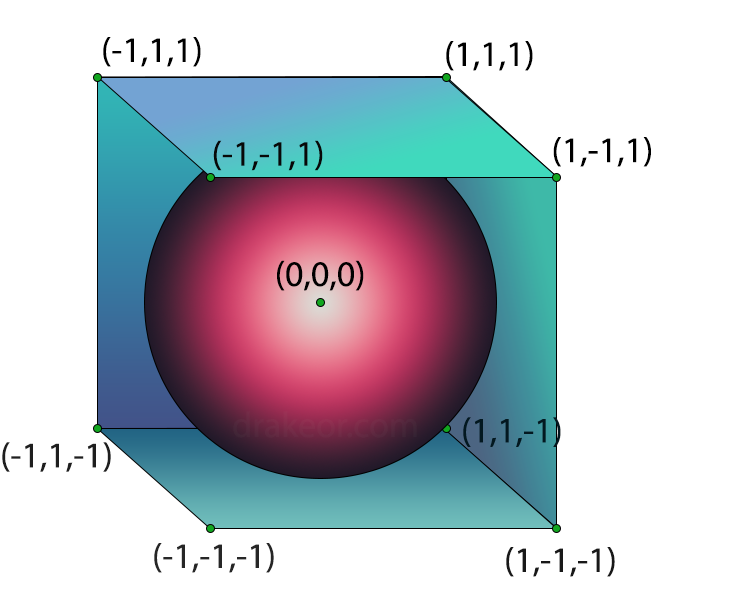

A Skybox is represented by a simple cube, with six different textures corresponding to the six sides of the cube. Although a unit cube would work totally fine, the calculations are much easier if we size the cube so that it encloses our unit sphere exactly.

Between our 6 surfaces, we'll have a surface at x = {-1,1}, y={-1,1}, and z={-1,1} with the length of each side of the cube as 2. Consequently, each Skybox texture has to have an equal width and height.

Conversion from Equirectangular to Skybox

Pseudocode

Here's the high-level pseudocode for my general approach to this problem. I'll be referring back to it often throughout the rest of this post.

    1) for each face of the cube
    2)     for each pixel on that face of the cube
    3)         grab location of pixel in cartesian space (x,y,z)
    4)         draw a vector from origin (0,0,0) to point (x,y,z) on cube where that pixel is
    5)         determine the point (x',y',z') of intersection on the unit sphere
    6)         convert point (x',y',z') to spherical coordinates (azimuth, elevation)
    7)         look up pixel on equirectangular texture based on azimuth and elevation
    8)         set the pixel on the face of the cube to the value read from the equirectangular texture

UV Texture to Cartesian Coordinates

Let's first address points (1), (2), and (3). Our ultimate goal is to take in a UV texture point on a particular face of the cube and output a cartesian coordinate.

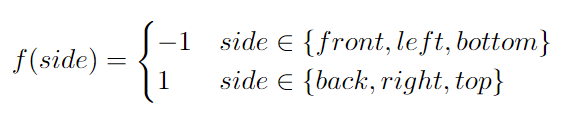

Due to how our cube is defined, one axis will always be fixed at -1 or 1 along a chosen face. Let's first build a function to return either -1 or 1 for the fixed axis based on face:

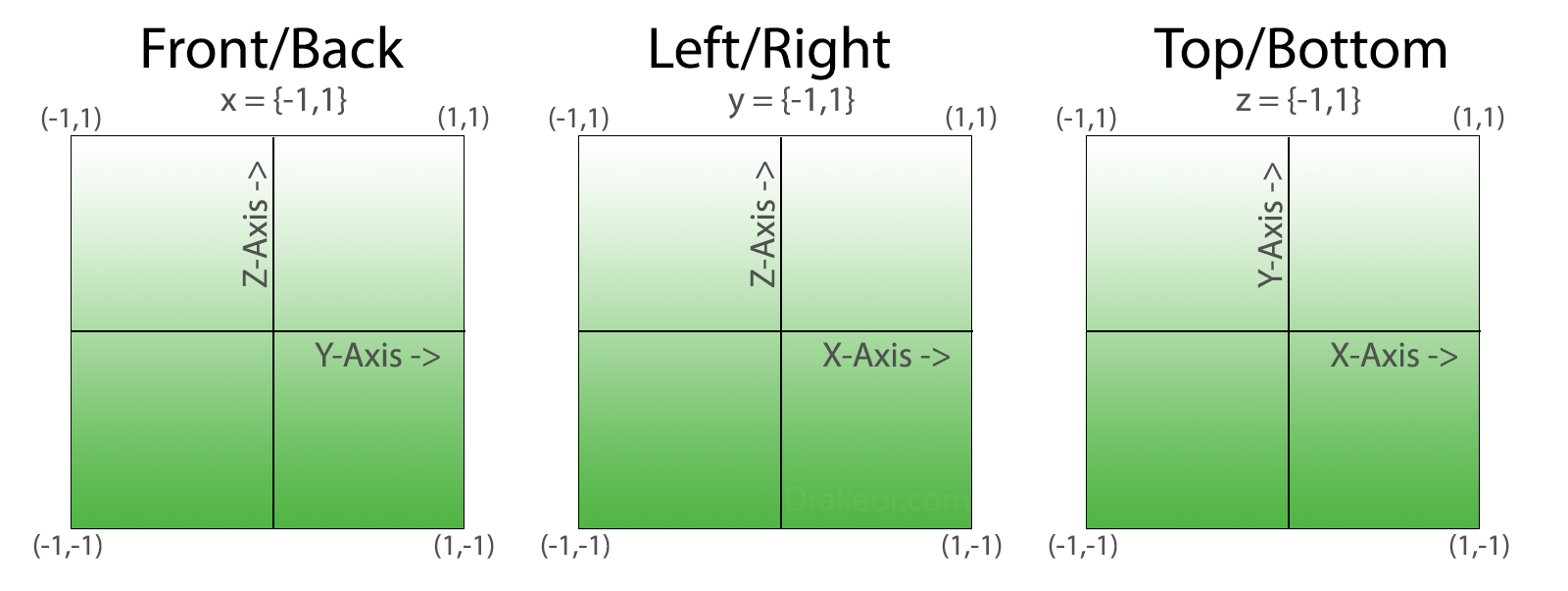

While one axis is fixed, the other two directly relate to the UV coordinates. How they relate depends entirely on what side we're currently looking at.

For example, let's first look at the left side. Using our function f(side) above gives us f(left) = -1. The left side is fixed on the y-axis and so y=-1. The x-axis corresponds to u and the z-axis corresponds to the v coordinate. Here's a convenient visualization for the other two sides:

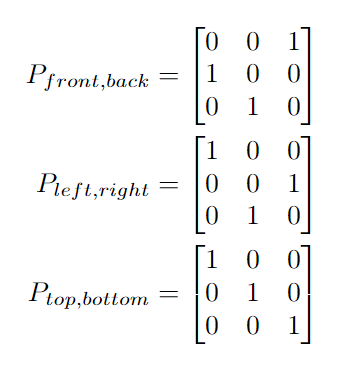

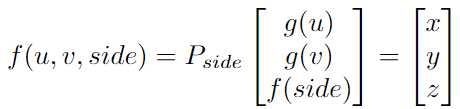

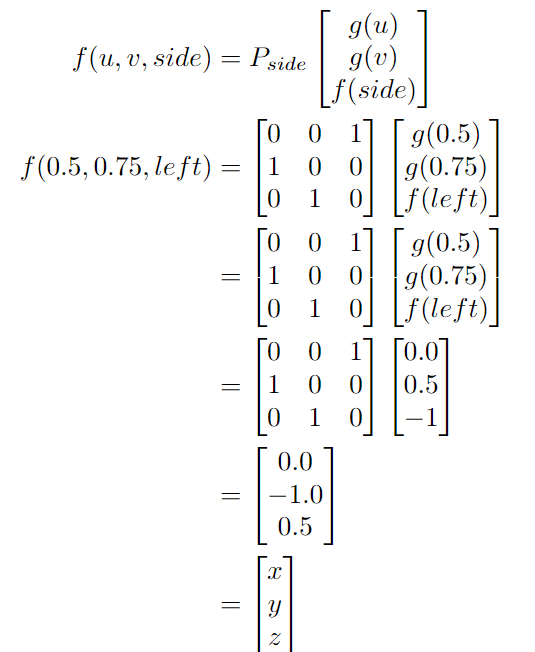

To map a vector of (u,v,f(side)) to the correct axis of (x,y,z), we can translate each of these three images into a permutation matrix based on which side is being observed.

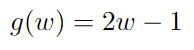

The last function we need, g, converts from the range of the UV texture [0,1] to the range [-1,1], the minimum and maximum values a cartesian coordinate on the cube can take. We'll need to wrap our u and v in this function to give us our input vector of (g(u),g(v),f(side)).
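Going by the remapping used in the code later in this post (`u = 2.0f * u - 1.0f`), g can be written as the following linear map, with w standing in for either u or v:

```latex
g(w) = 2w - 1, \qquad w \in [0, 1] \;\Longrightarrow\; g(w) \in [-1, 1]
```

As a quick check, g(0) = -1, g(0.5) = 0, and g(1) = 1, so the two edges of the texture land on the two edges of the cube face.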

Tying this all together, we can finally build a complete function that takes in both a side and UV coordinate and outputs a specific cartesian coordinate:
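One way to write the combined function is shown here. The names h and P_side are assumptions introduced for illustration, standing for the overall mapping and for the permutation matrix of the chosen side:

```latex
\begin{pmatrix} x \\ y \\ z \end{pmatrix}
= h(\text{side}, u, v)
= P_{\text{side}}
\begin{pmatrix} g(u) \\ g(v) \\ f(\text{side}) \end{pmatrix}
```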

Here's an example of converting the UV point (0.5, 0.75) on the left side to cartesian coordinates.

Let's see if this answer makes sense. The y-axis position is -1 as expected. The x-axis position is 0 because we picked the midpoint of u (0.5). The z-axis position is 0.5, which is 75% of the way from -1 to 1, as expected.
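The same example can be checked with a few lines of C++. This is a minimal sketch: `Side`, `RemapUv`, `FixedAxisValue`, and `UvToCartesian` are hypothetical names chosen for illustration, while the axis assignments and signs follow the conventions described in this post.

```cpp
#include <array>

// Hypothetical enum for illustration only.
enum class Side { Top, Bottom, Left, Right, Front, Back };

// g: remap a UV coordinate from [0,1] to [-1,1].
double RemapUv(double w) { return 2.0 * w - 1.0; }

// f: value of the fixed axis for a side (signs follow this post's convention).
double FixedAxisValue(Side side)
{
    switch (side)
    {
        case Side::Bottom:
        case Side::Back:
        case Side::Right:
            return 1.0;
        default:
            return -1.0;
    }
}

// Returns {x, y, z} for the UV point (u, v) on the given side.
std::array<double, 3> UvToCartesian(Side side, double u, double v)
{
    const double a = RemapUv(u);
    const double b = RemapUv(v);
    const double c = FixedAxisValue(side);
    switch (side)
    {
        case Side::Top:
        case Side::Bottom:
            return {a, b, c};   // z is fixed
        case Side::Left:
        case Side::Right:
            return {a, c, b};   // y is fixed
        default:
            return {c, a, b};   // x is fixed (Front/Back)
    }
}
```

Calling `UvToCartesian(Side::Left, 0.5, 0.75)` yields x = 0, y = -1, z = 0.5, matching the reasoning above.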

Note: Converting back from cartesian coordinates to UV coordinates is straightforward too. Function g is one-to-one and invertible (just solve its definition for w). All the permutation matrices defined are invertible, with two of the matrices being involutory (they equal their inverse). This will be useful in a later post for converting from a skybox to Equirectangular.
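As a rough sketch of what that inverse could look like, assuming g(w) = 2w - 1 and an index-based axis mapping where `cart[i] = uvc[m[i]]`: the function names `InverseRemap` and `UndoCoordMap` are hypothetical and not taken from the project.

```cpp
#include <array>

// Inverse of g: map a face coordinate in [-1,1] back to UV space [0,1].
double InverseRemap(double x) { return (x + 1.0) / 2.0; }

// Given an index map m with cart[i] = uvc[m[i]], recover the
// (g(u), g(v), f(side)) triple from the cartesian point (x, y, z).
std::array<double, 3> UndoCoordMap(const std::array<int, 3>& m,
                                   const std::array<double, 3>& cart)
{
    std::array<double, 3> uvc{};
    for (int i = 0; i < 3; ++i)
    {
        uvc[m[i]] = cart[i];
    }
    return uvc;
}
```

For the two involutory mappings, undoing the permutation is the same operation as applying it; only the third mapping needs a genuinely different inverse.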

Let's move right into the code implementation. Although permutation matrices are nice in theory, in practice matrix operations are fairly expensive. Instead of a permutation matrix, we can do our mapping more efficiently by simply copying the values of g(u), g(v) and f(side) to the correct cartesian coordinate.

    // ......

    template<typename T>
    Point3d<int32_t> CoordConversions<T>::SideToCoordMap(SkyboxSurf side)
    {
        switch(side)
        {
            // Top-Bottom plane is simple xy plane. z = {-1, 1}.
            case TopSurf:
            case BottomSurf:
                return Point3d<int32_t>({0, 1, 2});

            // Left-Right is the xz plane. y = {-1, 1}.
            case LeftSurf:
            case RightSurf:
                return Point3d<int32_t>({0, 2, 1});

            // Front-Back is the yz plane. x = {-1, 1}.
            case FrontSurf:
            case BackSurf:
                return Point3d<int32_t>({2, 0, 1});
        }

        return Point3d<int32_t>({0, 1, 2});
    }

    template<typename T>
    T CoordConversions<T>::SideToConstVal(SkyboxSurf side)
    {
        switch(side)
        {
            // These are on positive axis
            case BottomSurf:
            case BackSurf:
            case RightSurf:
                return 1.0f;

            // These are on negative axis
            case TopSurf:
            case LeftSurf:
            case FrontSurf:
                return -1.0f;
        }

        return 1.0f;
    }

    // .....

    // Output all six images
    for(int k = 0; k < skyboxImgs.size(); k++)
    {
        Point3d<int32_t> coordMap = CoordConversions<T>::SideToCoordMap(static_cast<SkyboxSurf>(k));
        T constVal = CoordConversions<T>::SideToConstVal(static_cast<SkyboxSurf>(k));

        for(int i = 0; i < skyboxImgs[k].GetWidth(); i++)
        {
            for(int j = 0; j < skyboxImgs[k].GetHeight(); j++)
            {
                // Pixel position to uv position [0,1]
                T u = (T)i / (T)(skyboxImgs[k].GetWidth() - 1);
                T v = (T)j / (T)(skyboxImgs[k].GetHeight() - 1);

                // Convert uv position [0,1] to a coordinate on one of the
                // sides [-1,1]. The skybox is a cube with side length 2
                // that encloses the unit sphere.
                u = 2.0f * u - 1.0f;
                v = 2.0f * v - 1.0f;

                // Pack the remapped u and v together with the fixed-axis value
                std::array<T, 3> uvCoord = {u, v, constVal};

                // Transform uv to cartesian coordinates
                // Convert f:[u,v,c] -> [x,y,z]
                Point3d<T> cartCoord;
                cartCoord.x = uvCoord[coordMap.x];
                cartCoord.y = uvCoord[coordMap.y];
                cartCoord.z = uvCoord[coordMap.z];

                // ... do something with cartCoord later
            }
        }
    }

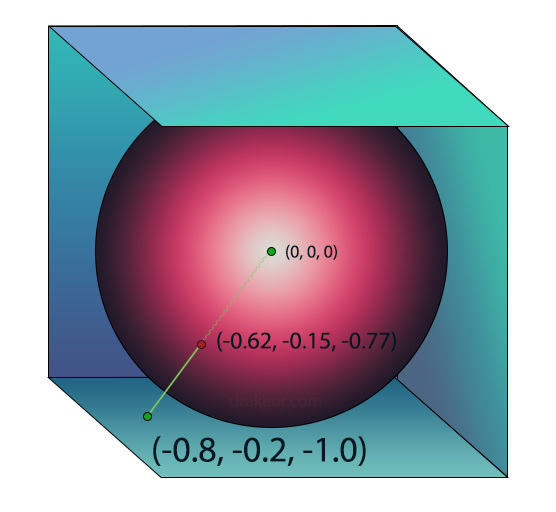

Finding the point of intersection on the unit sphere

Points (4) and (5) of our pseudocode are much more straightforward.

To figure out what to render at each pixel on the cube, we'll first draw a segment from the origin to the pixel on the cube and grab the point of intersection on the unit sphere. The intersection on the unit sphere will be translated to the appropriate spherical coordinate on the UV texture for the Equirectangular projection.

Since we chose to use the unit sphere for everything, the point of intersection is easy to compute as the radius will always be one on the unit sphere. This means that simply considering the line segment above as a vector and normalizing it will give us the point of intersection.
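A minimal sketch of that normalization step, using a hypothetical `Vec3` struct rather than the project's `Point3d` type:

```cpp
#include <cmath>

// Hypothetical vector type for illustration only.
struct Vec3 { double x, y, z; };

// Scale v to length 1. The result is the point where the ray from the
// origin through v crosses the unit sphere. Assumes v is not the zero
// vector, which holds for every point on the cube because one of its
// coordinates is always -1 or 1.
Vec3 Normalize(const Vec3& v)
{
    const double length = std::sqrt(v.x * v.x + v.y * v.y + v.z * v.z);
    return { v.x / length, v.y / length, v.z / length };
}
```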

Converting the point of intersection to Equirectangular Coordinates

The final piece of the puzzle (pseudocode points (6) and (7)) is to convert the point of intersection to spherical coordinates. For this, we can use the Cartesian to Spherical Coordinates calculation that was defined near the beginning of this post. Disregarding radius (since it will be 1 anyway), we can then just use the azimuth and elevation values to directly look up the pixel value on the equirectangular texture. This equirectangular pixel data is the data we should use for the point on the skybox we just drew a vector to.
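The lookup itself is just the pixel-to-angle mapping from the Equirectangular section run in reverse. Here is a minimal sketch, assuming a nearest-pixel lookup; the project's actual `GetPixelAtPoint` may well behave differently (for example, by interpolating), and `PixelPos` and `SphericalToPixel` are hypothetical names.

```cpp
#include <algorithm>
#include <cmath>

// Hypothetical type for illustration only.
struct PixelPos { int x, y; };

// Map (azimuth, elevation) to the nearest pixel of a width x height
// equirectangular texture.
PixelPos SphericalToPixel(double azimuth, double elevation, int width, int height)
{
    const double pi = std::acos(-1.0);

    // [-pi, pi] -> [0, 1] and [-pi/2, pi/2] -> [0, 1]
    const double u = (azimuth + pi) / (2.0 * pi);
    const double v = (elevation + pi / 2.0) / pi;

    // [0, 1] -> pixel index, rounded to the nearest pixel
    const int x = static_cast<int>(std::lround(u * (width - 1)));
    const int y = static_cast<int>(std::lround(v * (height - 1)));

    // Guard against rounding error pushing an index out of bounds
    return { std::min(std::max(x, 0), width - 1),
             std::min(std::max(y, 0), height - 1) };
}
```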

As an optimization, if we are just converting directly to spherical coordinates anyway, we don't need to normalize the vector first. We can simply set the radius term to 1 after conversion, giving us the point of intersection (remember the sphere is a unit sphere). Note that elevation and azimuth remain the same whether or not the vector is normalized; only the radius changes.
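The reason this works is that atan2 depends only on the ratio of its arguments, so scaling the vector leaves both angles untouched. A small sketch, with `Angles` and `DirectionAngles` as hypothetical names:

```cpp
#include <cmath>

// Hypothetical type for illustration only.
struct Angles { double azimuth, elevation; };

// Azimuth and elevation of the direction from the origin to (x, y, z).
// No normalization is performed: scaling (x, y, z) by any positive factor
// scales both arguments of each atan2 call equally, so the angles stay put.
Angles DirectionAngles(double x, double y, double z)
{
    return { std::atan2(y, x),
             std::atan2(z, std::sqrt(x * x + y * y)) };
}
```

For instance, the points (1, -1, 0.5) and (2, -2, 1) lie on the same ray from the origin and so produce the same pair of angles.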

We repeat this process for every pixel on all six sides of our cube and the result is 6 fully populated skybox textures we can save and use in whichever application (or game) we need.

Our final code implementation (modified from above) now looks like the following.

    for(int k = 0; k < skyboxImgs.size(); k++)
    {
        Point3d<int32_t> coordMap = CoordConversions<T>::SideToCoordMap(static_cast<SkyboxSurf>(k));
        T constVal = CoordConversions<T>::SideToConstVal(static_cast<SkyboxSurf>(k));

        for(int i = 0; i < skyboxImgs[k].GetWidth(); i++)
        {
            for(int j = 0; j < skyboxImgs[k].GetHeight(); j++)
            {
                // Pixel position to uv position [0,1]
                T u = (T)i / (T)(skyboxImgs[k].GetWidth() - 1);
                T v = (T)j / (T)(skyboxImgs[k].GetHeight() - 1);

                // Convert uv position [0,1] to a coordinate on one of the
                // sides [-1,1]. The skybox is a cube with side length 2
                // that encloses the unit sphere.
                u = 2.0f * u - 1.0f;
                v = 2.0f * v - 1.0f;

                // Pack the remapped u and v together with the fixed-axis value
                std::array<T, 3> uvCoord = {u, v, constVal};

                // Transform uv to cartesian coordinates
                // Convert f:[u,v,c] -> [x,y,z]
                Point3d<T> cartCoord;
                cartCoord.x = uvCoord[coordMap.x];
                cartCoord.y = uvCoord[coordMap.y];
                cartCoord.z = uvCoord[coordMap.z];

                // Azimuth and elevation do not depend on the vector's length,
                // so no normalization is needed before converting
                PointSphere<T> sphericalCoord = cartCoord.ConvertToSpherical();

                // Look up the matching pixel on the equirectangular texture
                Pixel pixelData = equirectTexture->GetPixelAtPoint(sphericalCoord.azimuth, sphericalCoord.elevation);

                // Write to pixel (i, j); u and v now hold face coordinates in
                // [-1,1] (assumes SetPixel takes pixel indices)
                skyboxImgs[k].SetPixel(i, j, pixelData);
            }
        }
    }

Full source files are available as SkyboxProjection.cpp on GitHub.

Conclusion

This was a pretty lengthy post, but I hope someone finds some use out of this. Note that there are probably different approaches to solving this problem and some are probably a lot more efficient than mine.

To keep this post from getting too long, I'll save my approach to converting from a skybox back to an Equirectangular projection for a part two.